Reprise

Main Contributions

Reprise was my senior project at DigiPen, developed in Unreal Engine. I worked on a team with 2 other Programmers, 2 Designers, 2 Sound Designers, and 6 Artists. My main roles on Reprise were Producer and Audio Programmer, and I was responsible for everything related to audio and the technology behind it. My main contributions are the Orchestrator, the audio-reactive algorithms and elements, custom Wwise Blueprints, and various gameplay elements such as the final boss.

Note: The information for this project has not been updated since late 2017, during the Prototype phase. It does not represent my full contributions, nor does it show the finalized art assets and appearance of the game.

Orchestrator

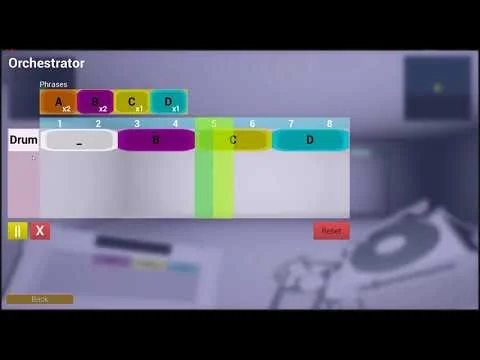

The Orchestrator is an in-game, user-customizable sequencer that lets the player arrange earned Phrases in any order to create their own loop. I took inspiration for its features from DAWs (digital audio workstations), but "gamified" most of them so they fit the game and are understandable to players who are not already familiar with how DAWs work.

The Orchestrator system utilizes Wwise, UMG, and custom sequencer logic.

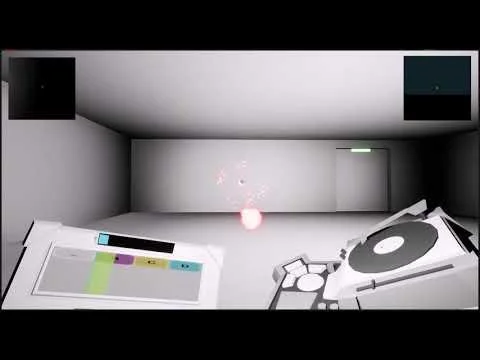

Audio-Reactive

The Audio-Reactive elements of Reprise are possible mainly because of the custom Wwise Blueprints I made to get the data we need at runtime. Each instrument has its own custom detection algorithm so we can react to the audio more precisely.

I implemented these systems so my team can simply check whether an instrument is active on a given frame. For sustained instruments such as the Synth, they also report how far the level is above the detection threshold, which is used for damage.
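The two kinds of answers described above (an "active this frame" flag, plus power above the threshold for sustained instruments) could look roughly like this. This is a minimal C++ sketch assuming a normalized per-frame meter level; `ReadSustained`, `LaserDamage`, the threshold/ceiling parameters, and the linear damage scaling are hypothetical illustrations, not the project's actual functions.

```cpp
#include <algorithm>
#include <cassert>

// Hypothetical reading returned by an instrument's metering function.
struct InstrumentReading {
    bool active;  // level is at or above the detection threshold this frame
    float power;  // 0.0 at the threshold, 1.0 at or above `ceiling`
};

// Sustained instruments (e.g. the Synth): report activity plus normalized
// power above the threshold. `threshold` and `ceiling` are assumed tuning values.
InstrumentReading ReadSustained(float level, float threshold, float ceiling) {
    assert(ceiling > threshold);
    if (level < threshold) return {false, 0.0f};
    const float power = std::min((level - threshold) / (ceiling - threshold), 1.0f);
    return {true, power};
}

// Hypothetical damage rule: base damage plus a bonus scaled by Synth power.
float LaserDamage(const InstrumentReading& synth, float baseDamage, float maxBonus) {
    return synth.active ? baseDamage + maxBonus * synth.power : 0.0f;
}
```

Gameplay code only ever sees the `InstrumentReading`, so the detection details can change without touching the weapon logic.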

Wwise Blueprints

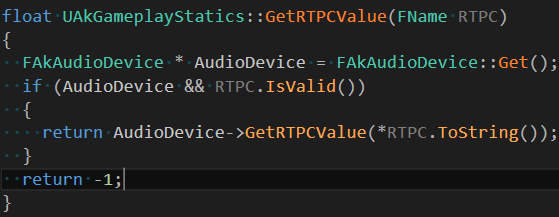

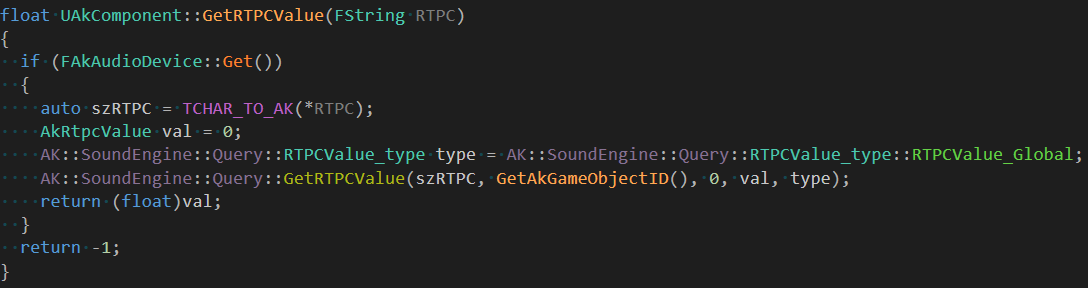

So far I've had to add the Query part of the Wwise API to the plugin. I implemented the GetRTPC function, and also the SetMultiplePositions function so our ambience can be played efficiently from multiple sources.

I only extend the plugin as we need new features, then use those Blueprint nodes to build in-editor tools for my team.

Note: The versions of Unreal and the Wwise plugin used for this project predate built-in support for most of this functionality, which only arrived in releases years later.

Development progress videos, from Prototype to Beta, are at the bottom of this page.

Orchestrator

The Orchestrator has been a very fun and interesting project. I wanted it to have the appearance and primary functionality of a normal DAW without actually being a DAW. Its current state is the product of multiple iterations and design changes made to better suit the game and its UX.

The following is the full feature list of the Orchestrator UI, which I developed with UMG:

Play / Pause, Stop

Scrubbing to each Phrase

Drag and Drop Phrases from Inventory to Instrument Slot

Reorganize placed Phrases in the sequencer by dragging and dropping them onto each other

Replacing a placed Phrase automatically puts the replaced Phrase back into the inventory

Drag a Phrase into your inventory or off-screen to remove it from the sequencer

Right-click to remove a Phrase

Play cursor showing the progress of the current Phrase

Reset sequencer
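The inventory and slot rules in this list can be modeled roughly as follows. This is a hypothetical C++ sketch of a single instrument row, with Phrases reduced to names; the class and method names are assumptions, and the real version lives in UMG widgets and Blueprints.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <optional>
#include <string>
#include <utility>
#include <vector>

// Hypothetical: a Phrase is identified by a name such as "Drum_A".
using Phrase = std::string;

// One instrument row of the Orchestrator plus the player's Phrase inventory.
class OrchestratorTrack {
public:
    explicit OrchestratorTrack(std::size_t numSlots) : slots_(numSlots) {}

    void AddToInventory(const Phrase& p) { inventory_.push_back(p); }

    // Drag from inventory into a slot; a replaced Phrase returns to the inventory.
    bool PlaceFromInventory(const Phrase& p, std::size_t slot) {
        auto it = std::find(inventory_.begin(), inventory_.end(), p);
        if (it == inventory_.end() || slot >= slots_.size()) return false;
        inventory_.erase(it);
        if (slots_[slot]) inventory_.push_back(*slots_[slot]);
        slots_[slot] = p;
        return true;
    }

    // Drop one placed Phrase onto another slot: the two slots swap contents.
    void Swap(std::size_t a, std::size_t b) {
        assert(a < slots_.size() && b < slots_.size());
        std::swap(slots_[a], slots_[b]);
    }

    // Right-click, drag off-screen, or drag back to the inventory.
    void Remove(std::size_t slot) {
        assert(slot < slots_.size());
        if (slots_[slot]) {
            inventory_.push_back(*slots_[slot]);
            slots_[slot].reset();
        }
    }

    // Reset sequencer: every placed Phrase returns to the inventory.
    void Reset() {
        for (std::size_t i = 0; i < slots_.size(); ++i) Remove(i);
    }

    const std::optional<Phrase>& Slot(std::size_t i) const { return slots_.at(i); }
    const std::vector<Phrase>& Inventory() const { return inventory_; }

private:
    std::vector<std::optional<Phrase>> slots_;
    std::vector<Phrase> inventory_;
};
```

Routing every removal path through `Remove` means a Phrase can never be lost: it is always either in a slot or back in the inventory.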

Originally, as you can see in the development videos at the bottom of this page, the Orchestrator displayed every bar, and the play cursor moved bar by bar instead of by Phrase. This is an example of where I was making it a bit too much like a DAW. Realistically, players don't need that information; they just need to know which section of Phrases is playing and how far along it is. So I changed the sequencer to display numbered Phrases instead of bars, and expanded the play cursor to the width of a Phrase. By design, each Phrase is always exactly 2 bars long. That detail matters to us as developers, but the player only ever sees it as a Phrase.
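Because every Phrase is exactly 2 bars, the Phrase-level play cursor can be derived from elapsed time alone. A sketch, assuming 4/4 time and a fixed tempo (neither is stated in this write-up): at 120 BPM a beat lasts 0.5 s, a bar 2 s, and a Phrase 4 s. The function names are hypothetical.

```cpp
#include <cassert>
#include <cmath>

// Where the Phrase-sized play cursor should be drawn.
struct CursorPosition {
    int phraseIndex;  // which numbered Phrase is highlighted
    double progress;  // 0.0 .. 1.0 through that Phrase
};

constexpr int kBeatsPerBar = 4;    // assumption: 4/4 time
constexpr int kBarsPerPhrase = 2;  // every Phrase is exactly 2 bars

double PhraseDurationSeconds(double bpm) {
    assert(bpm > 0.0);
    return kBarsPerPhrase * kBeatsPerBar * (60.0 / bpm);
}

// Maps elapsed loop time to the cursor, wrapping because the loop repeats.
CursorPosition CursorAt(double elapsedSeconds, double bpm, int numPhrases) {
    assert(numPhrases > 0 && elapsedSeconds >= 0.0);
    const double phrasesElapsed = elapsedSeconds / PhraseDurationSeconds(bpm);
    const double whole = std::floor(phrasesElapsed);
    return {static_cast<int>(whole) % numPhrases, phrasesElapsed - whole};
}
```

For example, 10 s into a 4-Phrase loop at 120 BPM puts the cursor halfway through Phrase index 2.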

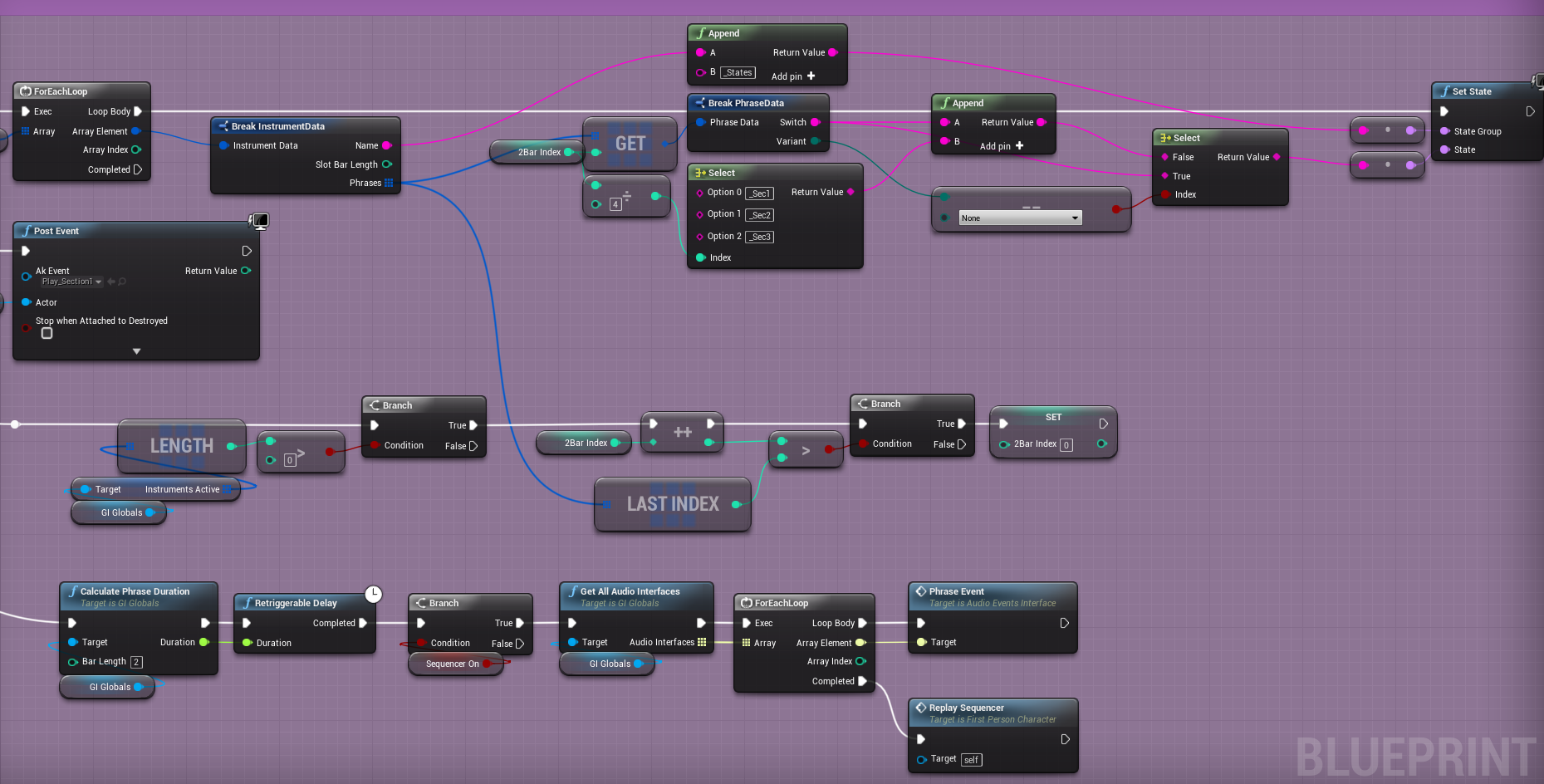

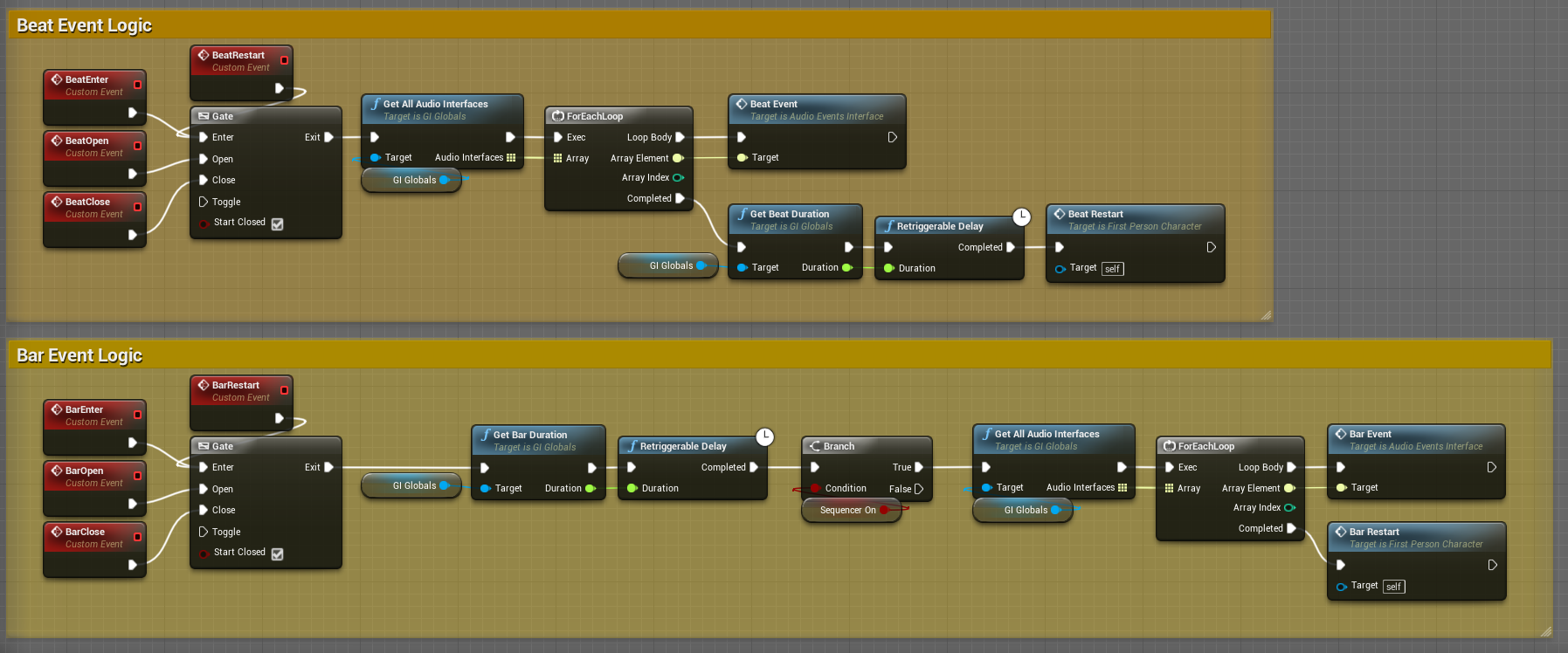

Here is a glimpse of what's going on under the hood:

I won't go into detail on everything I've done, but I will explain the main concepts behind what I've implemented.

The snippet above is the core of the sequencer. Every 2 bars, it sets the appropriate States in Wwise based on the next segment of Phrases. The State names are built on the fly from the instrument, the Phrase variant, and the current section of the Orchestrator. After the States are set, it posts the corresponding Wwise event, prepares the next step in the Orchestrator, sends out a Phrase event, and then repeats until stopped.

All of this logic is set up in a sequence, and it is all the sequencer needs in order to play. In game, this is what runs when the player shoots. The design-time UI version of the Orchestrator, however, needs a lot of additional logic.
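The core sequencer step could be sketched in C++ as follows. The `AudioHooks` callbacks stand in for the real Wwise calls (setting a State, posting an event) and the Phrase broadcast; the `<Instrument>_<Variant>_<Section>` naming scheme and the `Play_Orchestrator` event name are hypothetical, since the write-up only says the State names combine those three pieces of data.

```cpp
#include <cassert>
#include <cstddef>
#include <functional>
#include <string>
#include <utility>
#include <vector>

struct PlacedPhrase {
    std::string instrument;  // e.g. "Drum"; also used as the State Group here
    char variant;            // e.g. 'A'
};

// Hypothetical stand-ins for the engine-side calls.
struct AudioHooks {
    std::function<void(const std::string& group, const std::string& state)> setState;
    std::function<void(const std::string& event)> postEvent;
    std::function<void(int section)> onPhrase;  // Phrase event for listeners
};

// Hypothetical naming scheme: "<Instrument>_<Variant>_<Section>".
std::string BuildStateName(const PlacedPhrase& p, int section) {
    return p.instrument + "_" + p.variant + "_" + std::to_string(section);
}

class Sequencer {
public:
    // columns[section] holds the Phrases the player placed in that section.
    Sequencer(std::vector<std::vector<PlacedPhrase>> columns, AudioHooks hooks)
        : columns_(std::move(columns)), hooks_(std::move(hooks)) {
        assert(!columns_.empty());
    }

    // Called once every 2 bars while playing.
    void Step() {
        for (const PlacedPhrase& p : columns_[section_]) {
            hooks_.setState(p.instrument, BuildStateName(p, static_cast<int>(section_)));
        }
        hooks_.postEvent("Play_Orchestrator");
        const std::size_t played = section_;
        section_ = (section_ + 1) % columns_.size();  // prepare the next step
        hooks_.onPhrase(static_cast<int>(played));
    }

    std::size_t NextSection() const { return section_; }

private:
    std::vector<std::vector<PlacedPhrase>> columns_;
    AudioHooks hooks_;
    std::size_t section_ = 0;
};
```

Keeping the engine calls behind callbacks makes the step testable without Wwise running; a timer firing every 2 bars would call `Step()`.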

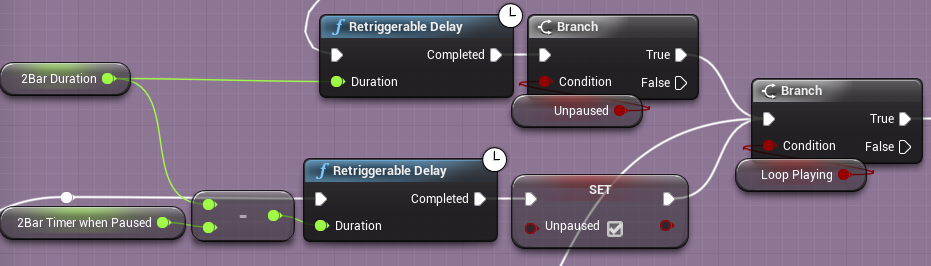

The UI version can be started in 2 separate ways. Since the player can pause, I need to track how far playback has progressed through the current segment. Depending on the state of the Orchestrator UI, it either plays out the time remaining in the segment since it was paused, or plays the full duration of the Phrase from the start. The "Unpaused" and "Loop Playing" variables track state for other UI functionality.
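The two start paths can be expressed as a small timer that remembers how much of the current segment was left at pause time. This is a hedged sketch with hypothetical names and times in seconds from an assumed game clock; the project itself uses Blueprint delays, and the `paused_` / `playing_` flags only loosely mirror the "Unpaused" and "Loop Playing" variables.

```cpp
#include <cassert>

class PhraseTimer {
public:
    explicit PhraseTimer(double phraseSeconds) : phraseSeconds_(phraseSeconds) {
        assert(phraseSeconds > 0.0);
    }

    // Starts or resumes playback at `now`; returns how long to wait before the
    // next sequencer step (the remaining time if resuming, else a full Phrase).
    double Play(double now) {
        segmentLength_ = paused_ ? remaining_ : phraseSeconds_;
        paused_ = false;
        playing_ = true;
        segmentStart_ = now;
        return segmentLength_;
    }

    // Records how much of the current segment is left.
    void Pause(double now) {
        assert(playing_);
        remaining_ = segmentLength_ - (now - segmentStart_);
        playing_ = false;
        paused_ = true;
    }

    // Called when a step fires: the next Phrase always gets its full length.
    double NextPhrase(double now) {
        segmentStart_ = now;
        segmentLength_ = phraseSeconds_;
        return segmentLength_;
    }

    // Stop discards any paused position so the next Play starts fresh.
    void Stop() {
        playing_ = false;
        paused_ = false;
        remaining_ = 0.0;
    }

    bool IsPaused() const { return paused_; }

private:
    double phraseSeconds_;
    double segmentStart_ = 0.0;
    double segmentLength_ = 0.0;
    double remaining_ = 0.0;
    bool playing_ = false;
    bool paused_ = false;
};
```

Pausing twice within one Phrase works because each resume treats the remaining time as a new, shorter segment.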

There is a lot more to this system, especially the UMG code behind all of the UI functionality, but the snippets above show how I structured the base of the sequencer in Unreal to control Wwise.

Note from the future: Oh boy, all of the delays used in this system 😅

Audio-Reactive

The Gun, Environment, UI, and Enemies are all designed to react to the game's music in real time. The gun is the primary audio-reactive focus, as it is the main mechanic of the game. Currently, the environment and UI have some audio-reactive elements, and the intention is for enemies to react only to the beat and tempo.

Unlike in my previous game, Suara, I control all beat and bar events on the engine side instead of relying on Wwise callbacks. The Orchestrator is also a custom engine-side sequencer, so this keeps everything in sync with it; I didn't want Wwise and Unreal to be 2 separate sources of audio timing.

The Beat and Bar events are driven by the main sequencer controller to ensure everything stays in sync. Other Blueprints can subscribe to these Audio Events: currently Beat, Bar, and Phrase, with Orchestrator Start / Stop planned.
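A sketch of engine-side Audio Events fed by a single beat clock, so Bar and Phrase events are derived from the same counter as Beat and cannot drift apart. The class, the 4/4 meter, and the listener signature are assumptions; in the project, the subscribers are Blueprints.

```cpp
#include <cassert>
#include <functional>
#include <utility>
#include <vector>

enum class AudioEvent { Beat, Bar, Phrase };

class AudioEventBus {
public:
    using Listener = std::function<void(AudioEvent, int count)>;

    void Subscribe(Listener l) {
        assert(l);  // an empty callback would throw when broadcast
        listeners_.push_back(std::move(l));
    }

    // Called by the sequencer controller on every beat; Bar and Phrase fire
    // on the beats that start a new bar or a new 2-bar Phrase.
    void TickBeat() {
        Broadcast(AudioEvent::Beat, beat_);
        if (beat_ % kBeatsPerBar == 0) Broadcast(AudioEvent::Bar, beat_ / kBeatsPerBar);
        if (beat_ % kBeatsPerPhrase == 0) Broadcast(AudioEvent::Phrase, beat_ / kBeatsPerPhrase);
        ++beat_;
    }

private:
    static constexpr int kBeatsPerBar = 4;                    // assumption: 4/4
    static constexpr int kBeatsPerPhrase = kBeatsPerBar * 2;  // 2 bars per Phrase

    void Broadcast(AudioEvent e, int count) {
        for (const Listener& l : listeners_) l(e, count);
    }

    std::vector<Listener> listeners_;
    int beat_ = 0;
};
```

Over 16 beats a subscriber receives 16 Beat, 4 Bar, and 2 Phrase events, all from the same tick.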

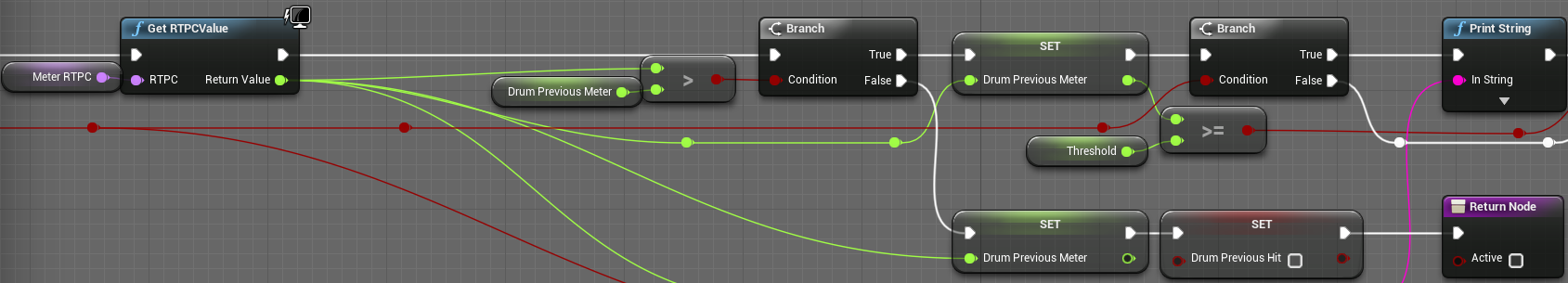

Another source of audio-reactive data is instrument metering, which I read using the GetRTPC function I added.

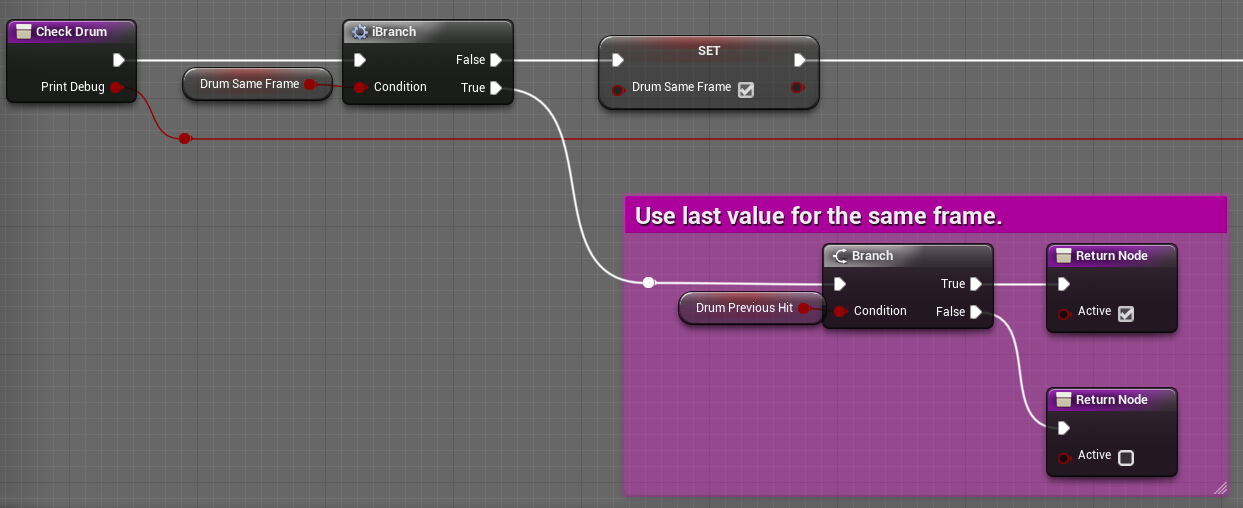

This is a portion of the function that checks whether the Drum is active and what counts as a hit. Since I have a separate algorithm per instrument, I can make assumptions about the nature of each instrument's data. Metering in Wwise only gives me amplitudes, which I read as RMS values. Because drums are percussive, I can assume there will never be back-to-back hits, and that a hit shows up as values that rise quickly and then fall quickly. I then require the ramp-up to exceed a certain threshold so the detection doesn't trigger too early or produce false positives.
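The drum assumptions above translate naturally into a small rising-edge detector. This is a hypothetical sketch, not the project's algorithm: it flags a hit when the meter level jumps by at least `minRamp` in one frame and is above `minLevel`, then disarms until the level starts falling, which enforces the "no back-to-back hits" assumption. Both thresholds are assumed tuning values.

```cpp
#include <cassert>

class DrumHitDetector {
public:
    DrumHitDetector(float minLevel, float minRamp) : minLevel_(minLevel), minRamp_(minRamp) {
        assert(minRamp > 0.0f);
    }

    // Feed one RMS meter sample per frame; returns true on the frame a hit starts.
    bool Update(float level) {
        const float ramp = level - previous_;
        bool hit = false;
        if (armed_ && ramp >= minRamp_ && level >= minLevel_) {
            hit = true;
            armed_ = false;  // ignore the rest of this rise: one hit per attack
        } else if (ramp < 0.0f) {
            armed_ = true;   // level is decaying, so the next sharp rise can count
        }
        previous_ = level;
        return hit;
    }

private:
    float minLevel_;
    float minRamp_;
    float previous_ = 0.0f;
    bool armed_ = true;
};
```

Requiring a steep per-frame ramp, rather than just a high level, is what filters out slow swells that would otherwise register as early or false hits.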

There is some extra logic for the drum, based on other assumptions, that is not shown in the image above. Also, to make sure every element that checks whether the drum is active gets the same answer, all calls within the same frame use the same value.
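Caching the result per frame can be sketched as below. The frame number and the `compute` callback are hypothetical stand-ins for engine data. Besides consistency, this matters if the detector is stateful (for example, one that compares each sample with the previous frame's): calling it twice in one frame would feed it the same sample twice and corrupt that comparison.

```cpp
#include <cassert>
#include <cstdint>
#include <functional>
#include <utility>

// Runs `compute` at most once per frame and hands every caller in that frame
// the same result.
class PerFrameCache {
public:
    explicit PerFrameCache(std::function<bool()> compute) : compute_(std::move(compute)) {
        assert(compute_);
    }

    bool Get(std::uint64_t frame) {
        if (!hasValue_ || frame != cachedFrame_) {
            cachedValue_ = compute_();
            cachedFrame_ = frame;
            hasValue_ = true;
        }
        return cachedValue_;
    }

private:
    std::function<bool()> compute_;
    std::uint64_t cachedFrame_ = 0;
    bool cachedValue_ = false;
    bool hasValue_ = false;
};
```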

Currently, we have 5 unique weapons: Pistol, Shotgun, Laser, Rocket, and Homing Missile. These are tied to the following instruments respectively: Hi-Hat, Drum, Synth, Bass, Toms.

To make the music the player creates a little more interesting, the Bass has some Lead Guitar mixed in, and the Toms end in a Crash.

Each instrument has its own metering function that my team can check in gameplay logic.

For example, my "CheckHomingMissile" function returns a "Lock On" bool when the Toms are active and a "Shoot" bool when the Crash is active, since the Crash fires at every target you locked on to with each Tom. All of the audio-reactive data is hidden behind functions that my team uses directly in gameplay.
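A hedged sketch of how gameplay code might consume such a result. The `CheckHomingMissile` signature here (taking the Tom and Crash detections as arguments), the integer target IDs with -1 meaning "no target", and the `HomingMissileWeapon` class are assumptions; only the Lock On / Shoot semantics come from the description above.

```cpp
#include <cassert>
#include <vector>

struct HomingMissileCheck {
    bool lockOn;  // "Lock On": a Tom hit this frame
    bool shoot;   // "Shoot": the Crash hit this frame
};

// Assumed signature: in the project, the function reads the meters itself.
HomingMissileCheck CheckHomingMissile(bool tomActive, bool crashActive) {
    return {tomActive, crashActive};
}

// Hypothetical weapon logic: each Tom hit locks the target under the
// crosshair, and the Crash fires at every locked target at once.
class HomingMissileWeapon {
public:
    // Returns the targets fired at this frame (empty unless the Crash hit).
    std::vector<int> Update(const HomingMissileCheck& check, int targetUnderCrosshair) {
        assert(targetUnderCrosshair >= -1);  // -1 means no target
        if (check.lockOn && targetUnderCrosshair >= 0) {
            locked_.push_back(targetUnderCrosshair);
        }
        std::vector<int> fired;
        if (check.shoot) {
            fired.swap(locked_);  // release all locks
        }
        return fired;
    }

private:
    std::vector<int> locked_;
};
```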

Wwise Blueprints

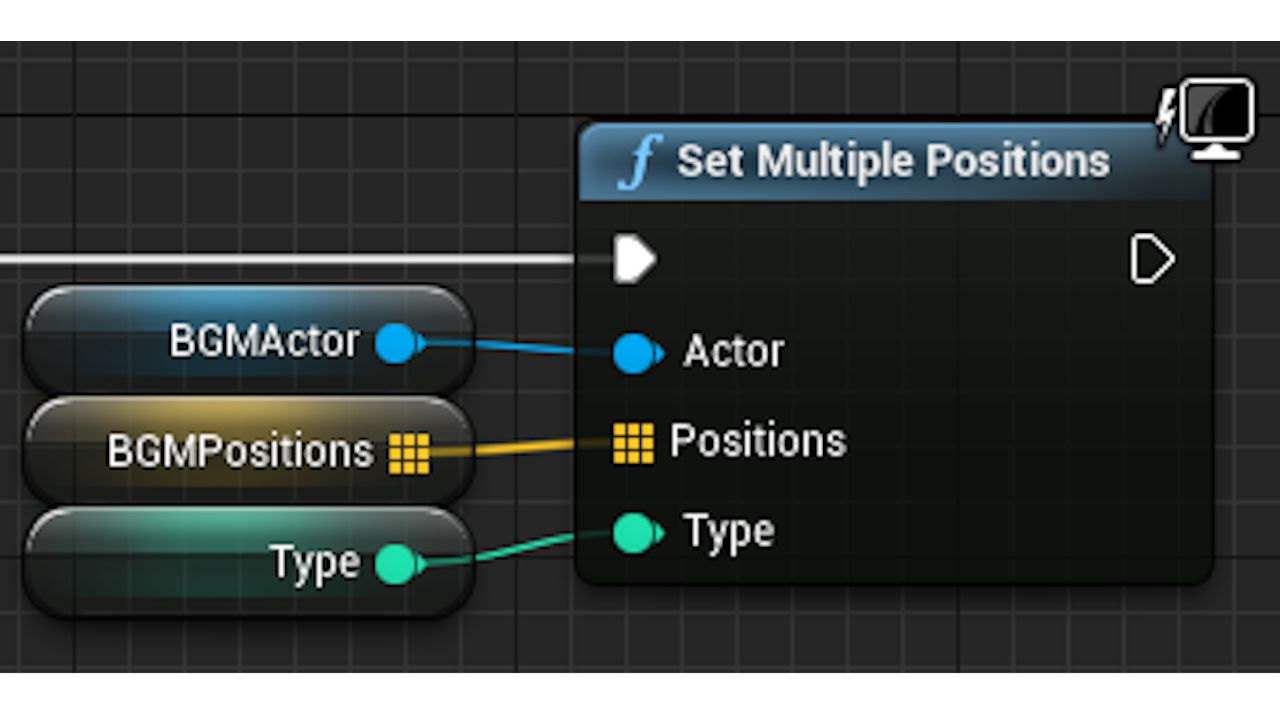

Because the default Wwise Unreal plugin was very bare-bones, I had to implement a couple of custom functions in the plugin and expose them as Blueprint nodes for use in the editor.

The core of Reprise is built on reacting to audio, so I needed the GetRTPC functionality in order to query Wwise for any of that data. I also realized there was no way to efficiently play a sound from multiple locations at once, so I implemented the SetMultiplePositions functionality as well.

Fortunately, having already done a custom Wwise integration for Suara's custom engine, I was able to learn my way around how the plugin exposes everything as Blueprints and implement my functions in a similar fashion.

The code is pretty straightforward, as I just had to define the functions correctly for them to be usable in Blueprints. This shows 2 of the 3 files I had to edit (AkAudioDevice, AkGameplayStatics, AkComponent) to make the functionality work as intended. For GetRTPC in particular, I also needed to include the Wwise Query headers to access those functions.

Development Progress Videos

Initial Development

The following playlist shows the development process, mainly showcasing the Audio-Reactive Gun and other audio-reactive elements of the game.